Stereoscopic dataset from a video game: detecting converged axes and perspective distortions in S3D videos

K. Malyshev, S. Lavrushkin, and D. Vatolin

Contact us:

- kirill.malyshev@graphics.cs.msu.ru

- sergey.lavrushkin@graphics.cs.msu.ru

- dmitriy.vatolin@graphics.cs.msu.ru

- video@compression.ru

Abstract

This paper presents a method for generating stereoscopic or multi-angle video frames using a computer game (Grand Theft Auto V). We developed a mod that captures synthetic frames and lets us create geometric distortions like those that occur in real video. These distortions are the main cause of viewer discomfort when watching 3D movies. Datasets generated in this way can aid in solving problems related to machine-learning-based quality assessment of stereoscopic or multi-angle video. We trained a convolutional neural network to evaluate perspective distortions and converged camera axes in stereoscopic video, then tested it on real 3D movies. The neural network found multiple examples of these distortions.

Key Features

- Dataset of synthetic frame sequences from the GTA V video game

- Suitable for stereoscopic video analysis and processing

- 4000 frames for the training set and 500 for the test set

- Pearson correlation coefficient is 0.956 for converged axes and 0.859 for perspective distortion
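For readers who want to reproduce this kind of figure on their own predictions, here is a minimal sketch of the Pearson correlation coefficient. It assumes the coefficient compares per-frame model outputs with the ground-truth distortion values; the sample numbers are hypothetical and are not results from the paper.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances, all without the common 1/n factor (it cancels).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical values for illustration only (not data from the paper).
ground_truth = [0.0, 0.5, 1.0, 1.5, 2.0]
predicted = [0.1, 0.4, 1.1, 1.4, 2.2]
r = pearson(ground_truth, predicted)
print(f"Pearson r = {r:.3f}")
```

A value close to 1 means the predictions follow the ground truth almost linearly; it does not by itself say anything about absolute error.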

Mod description

We developed our own mod that captures synthetic frames in GTA V. It includes a set of parameters for adjusting the camera to generate stereoscopic sequences with various geometric distortions.
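To illustrate why converged camera axes distort a stereo pair, the sketch below projects a 3D point through two idealized pinhole cameras and reports the vertical disparity between the views. This is a generic geometric model, not the mod's code: the function names, the `toe_in` parameter, and the sample values are all assumptions made for illustration.

```python
import math


def project(point, cam_x, yaw, focal=1.0):
    """Project a world point through a pinhole camera located at (cam_x, 0, 0).

    The camera is rotated by `yaw` radians about the vertical axis, so its
    optical axis points along (sin(yaw), 0, cos(yaw)) in world coordinates.
    """
    x, y, z = point
    dx = x - cam_x
    # World-to-camera transform for a rotation about the vertical axis.
    xc = dx * math.cos(yaw) - z * math.sin(yaw)
    zc = dx * math.sin(yaw) + z * math.cos(yaw)
    if zc <= 0:
        raise ValueError("point is behind the camera")
    return focal * xc / zc, focal * y / zc


def vertical_disparity(point, baseline, toe_in):
    """Vertical offset of a point between the left and right views.

    `toe_in` is the angle by which each camera is turned toward the other;
    0 gives a parallel rig.
    """
    _, v_left = project(point, -baseline / 2, +toe_in)
    _, v_right = project(point, +baseline / 2, -toe_in)
    return v_left - v_right


# Hypothetical scene point and rig parameters (units are arbitrary).
point = (1.0, 1.0, 5.0)
parallel = vertical_disparity(point, baseline=0.065, toe_in=0.0)
converged = vertical_disparity(point, baseline=0.065, toe_in=math.radians(2.0))
print(parallel, converged)
```

In this model a parallel rig gives no vertical disparity, while a toed-in rig shifts off-center points vertically by different amounts in each view, which is the keystone-like mismatch associated with converged axes.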

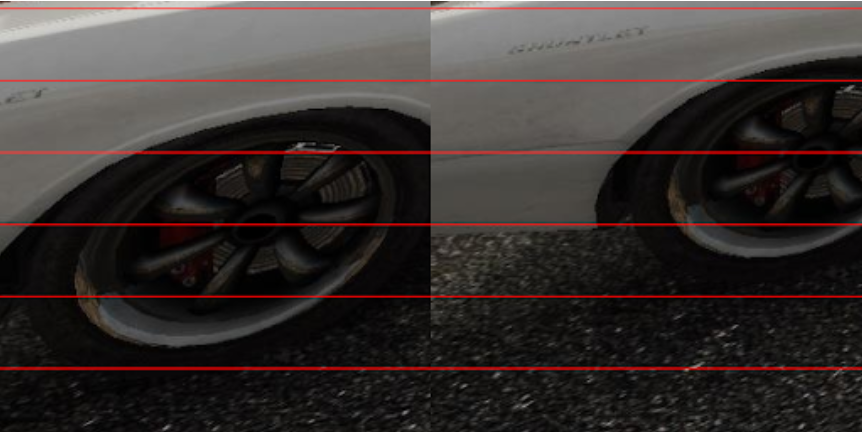

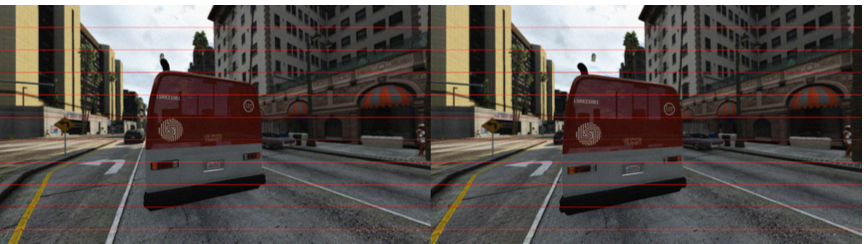

(a) Enlarged area

(b) Left and right images

The resulting dataset contains 4,500 frames at a resolution of 1,920×1,080. Most frames were modified with noise and/or blur.
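The page does not say which kind of noise or blur was applied, or how often. As a rough sketch of this kind of degradation step, the example below uses additive Gaussian noise and a 3×3 box blur on a small grayscale image stored as nested lists; the function names, probabilities, and noise level are assumptions.

```python
import random


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clamp pixel values to [0, 255]."""
    return [[min(255.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]


def box_blur(image):
    """Apply a 3x3 box blur, averaging over fewer pixels at the borders."""
    height, width = len(image), len(image[0])
    blurred = []
    for r in range(height):
        row = []
        for c in range(width):
            window = [image[rr][cc]
                      for rr in range(max(0, r - 1), min(height, r + 2))
                      for cc in range(max(0, c - 1), min(width, c + 2))]
            row.append(sum(window) / len(window))
        blurred.append(row)
    return blurred


def degrade(image, rng, noise_sigma=5.0, p_noise=0.5, p_blur=0.5):
    """Randomly apply blur and/or noise (probabilities are assumptions)."""
    if rng.random() < p_blur:
        image = box_blur(image)
    if rng.random() < p_noise:
        image = add_gaussian_noise(image, noise_sigma, rng)
    return image


rng = random.Random(0)  # fixed seed so the run is repeatable
frame = [[float((r * 16 + c * 8) % 256) for c in range(8)] for r in range(6)]
degraded = degrade(frame, rng)
```

For a stereo pair, one would need to decide whether both views receive the same degradation or independent ones; the source does not specify this.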

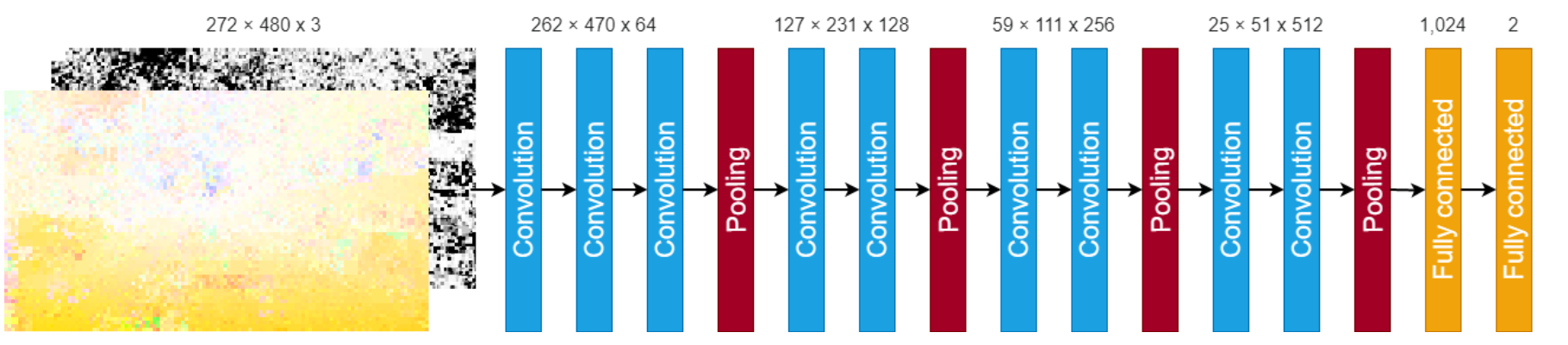

Model architecture

The model is a convolutional neural network that evaluates perspective distortions and converged camera axes in stereoscopic video.

Schematic of trained convolutional neural network


Results

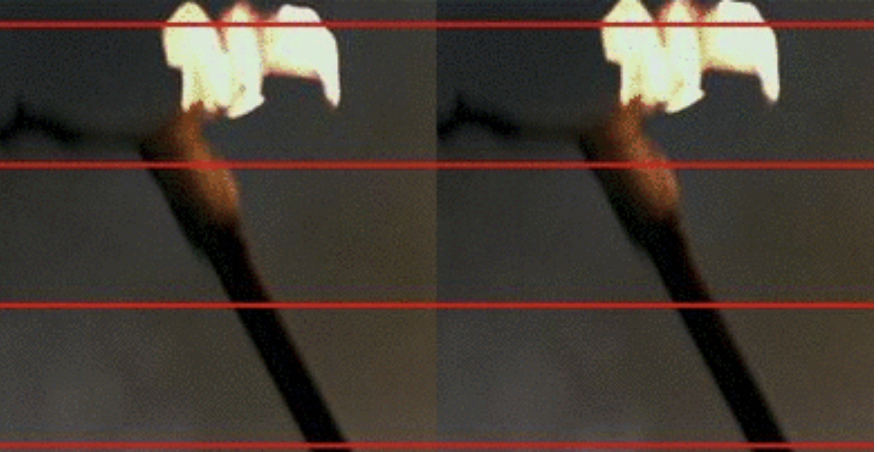

We also used the trained model to find distortion examples in stereoscopic films: Drive Angry and Pirates of the Caribbean: On Stranger Tides.

(a) Enlarged area

(b) Left and right images

Cite us

@INPROCEEDINGS{9376375,
  author={Malyshev, Kirill and Lavrushkin, Sergey and Vatolin, Dmitriy},
  booktitle={2020 International Conference on 3D Immersion (IC3D)},
  title={Stereoscopic Dataset from A Video Game: Detecting Converged Axes and Perspective Distortions in S3D Videos},
  year={2020},
  volume={},
  number={},
  pages={1-7},
  doi={10.1109/IC3D51119.2020.9376375}
}